Abstract

This page contains the audio clips and corresponding figures as referred to in the paper:

Huzaifah M., Wyse L. (2019), Applying Visual Domain Style Transfer and Texture Synthesis Techniques to Audio - Insights and Challenges, Neural Computing and Applications, DOI: 10.1007/s00521-019-04053-8.

Style transfer is a technique for combining two images based on the activations and feature statistics in a deep learning neural network architecture. This paper studies the analogous task in the audio domain and takes a critical look at the problems that arise when adapting the original vision-based framework to handle spectrogram representations. We conclude that CNN architectures with features based on 2D representations and convolutions are better suited for visual images than for time-frequency representations of audio. Despite the awkward fit, experiments show that the Gram-matrix determined “style” for audio is more closely aligned with timbral signatures without temporal structure whereas network layer activity determining audio “content” seems to capture more of the pitch and rhythmic structures. We shed insight on several reasons for the domain differences with illustrative examples. We motivate the use of several types of one-dimensional CNNs that generate results that are better aligned with intuitive notions of audio texture than those based on existing architectures built for images. These ideas also prompt an exploration of audio texture synthesis with architectural variants for extensions to infinite textures, multi-textures, parametric control of receptive fields and the constant-Q transform as an alternative frequency scaling for the spectrogram.

Accompanying code to generate the samples here can be found at this GitHub page.

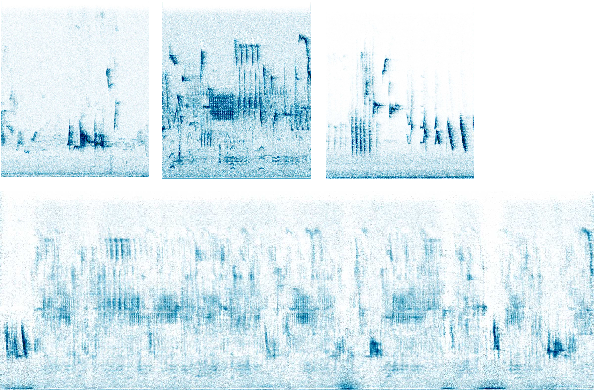

3. Stylization with spectrograms

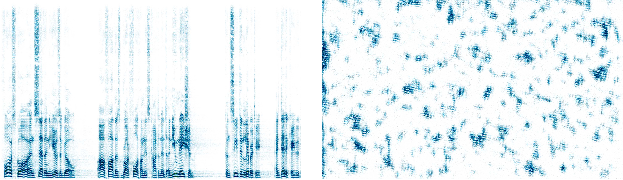

- [Left] Speech - Gettysburg address

- [Right] Synthesized 2D-CNN output (negative example)

Figure 1

Reference style image in the form of a spectrogram (left) and the synthesized texture processed using a 2D-CNN model (right). Texture synthesis done with a 2D convolutional kernel results in visual patterns that are similar to those for image style transfer but do not make much perceptual sense in the audio space: they do not carry any distinct notion of style or content. Our paper makes several suggestions to better align style transfer to the audio domain.
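The abstract's observation that Gram-matrix "style" aligns with timbre but not with temporal structure can be illustrated with a small sketch. It assumes a spectrogram stored as a (frequency bins x time frames) array whose frequency bins are treated as the channels of a 1D signal, which is one reading of the one-dimensional layout the abstract motivates. The function name `gram_matrix` and the random stand-in data are hypothetical, and the raw spectrogram is used in place of CNN activations to keep the example short.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations, averaged over time.

    features: array of shape (channels, time_frames).
    """
    n_frames = features.shape[1]
    return features @ features.T / n_frames

rng = np.random.default_rng(0)
# Hypothetical stand-in for a magnitude spectrogram: 8 frequency bins x 100 frames.
spectrogram = rng.random((8, 100))

# Shuffle the time frames, which destroys the temporal order of the signal.
shuffled = spectrogram[:, rng.permutation(spectrogram.shape[1])]

# The Gram matrix sums over time, so the shuffle leaves it unchanged.
g_original = gram_matrix(spectrogram)
g_shuffled = gram_matrix(shuffled)
print("Gram matrices match after shuffling:", np.allclose(g_original, g_shuffled))
```

With real network activations, each feature already summarizes a short window of frames, so structure inside the receptive field survives while longer-range ordering does not.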

5.2.1. Examples of audio style transfer

The content target for the following examples is speech:

- Speech - Gettysburg address

Various style references were used:

- Speech - Japanese Female

- Piano - River Flows in You

- Crowing rooster

- Imperial March

And here are the results of audio style transfer:

- Gettysburg address + Japanese Female

- Gettysburg address + Piano

- Gettysburg address + Crowing rooster

- Gettysburg address + Imperial March

For contrast, we swap the style and content references:

- Piano (content) + Gettysburg address (style)

5.2.2. Varying influence of style and content

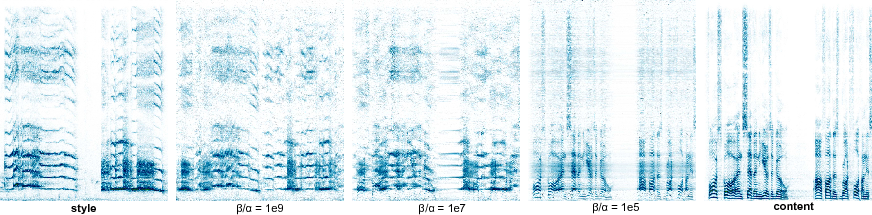

Figure 4

The columns show different relative weightings between style and content given by β/α (1e9, 1e7, 1e5), with the reference style on the left (crowing rooster) and reference content on the right (speech).

- [Left] Style: Crowing rooster

- [Right] Content: Speech - Gettysburg address

- [2nd from left] β/α = 1e9

- [Centre] β/α = 1e7

- [2nd from right] β/α = 1e5
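The ratio β/α can be read as the weight of the style term relative to the content term in a combined objective. The sketch below assumes the convention from image style transfer, in which α scales a content loss on activations and β scales a style loss on Gram matrices. The function names, the mean-squared-error form of both terms, and the random stand-in features are assumptions made for illustration, not the paper's exact formulation.

```python
import numpy as np

def gram_matrix(features):
    """Time-averaged channel correlations for features of shape (channels, frames)."""
    return features @ features.T / features.shape[1]

def total_loss(generated, content_ref, style_ref, alpha, beta):
    """alpha * content loss + beta * style loss (both mean squared errors)."""
    content_loss = np.mean((generated - content_ref) ** 2)
    style_loss = np.mean((gram_matrix(generated) - gram_matrix(style_ref)) ** 2)
    return alpha * content_loss + beta * style_loss

rng = np.random.default_rng(0)
# Hypothetical feature maps: 8 channels x 100 time frames each.
content_ref = rng.random((8, 100))
style_ref = rng.random((8, 100))
generated = rng.random((8, 100))

# The three ratios from Figure 4, with alpha fixed at 1 so that beta equals the ratio.
losses = {
    ratio: total_loss(generated, content_ref, style_ref, alpha=1.0, beta=ratio)
    for ratio in (1e9, 1e7, 1e5)
}
for ratio, loss in losses.items():
    print(f"beta/alpha = {ratio:.0e}: total loss = {loss:.3e}")
```

A larger ratio makes the style term dominate the objective, so an optimizer minimizing it would be pulled further toward the style reference and away from the content reference.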

5.2.3. Network weights and input initialization

- [Top] Style: Imperial March

- [Bottom] Content: Speech - The Rich and Famous

Figure 5

The reference style (top) and content (bottom) used for this experiment.

- (1) Random input + Random weights

- (2) Random input + Trained weights

- (3) Content input + Trained weights

- (4) Content input + Random weights

Figure 6

Synthesized hybrid image with different input and weight conditions. Clockwise from top left: (1) With random weights and initialization, the speech is clear, with the style timbre sitting slightly more recessed in the background. (2) It was still fairly problematic to get good results for trained weights and a random input. Hints of the associated style and content timbres could be picked up and seem well integrated but are very noisy. The output also loses any clear long-term temporal structure. (3) Trained weights and input content generated a sample sound fairly similar to (1), with a greater presence of noise and artefacts. (4) Random weights plus input content seem to result in the most integrated features of both style and content.

5.3.1. Infinite textures

Textures can be arbitrarily extended beyond the duration of the reference style:

- Speech - Gettysburg address extended from 10s to 30s

- Hen clucking extended from 5s to 15s

- Waves extended from 5s to 15s

5.3.2. Multi-texture synthesis

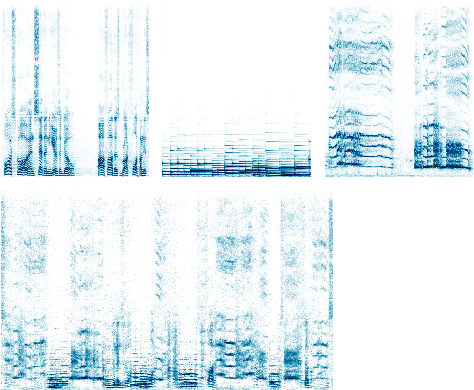

Figure 8

The extended multi-texture bird sound sample that was synthesized (bottom) from its 3 constituent clips (top). Prominent spectral structures from the component sounds can be seen in the output spectrogram although there are overlaps with other frequencies.

- [Top left] Texture source 1: Speech - Gettysburg address

- [Top centre] Texture source 2: Piano - River Flows in You

- [Top right] Texture source 3: Crowing rooster

- [Bottom] Multi-texture synthesized output: Crowing piano's address (Speech + Piano + Rooster)

Figure 9

The multi-texture output (bottom) from 3 distinct sounds (top): speech, piano and rooster. These sounds may be described as uncharacteristic for textures since they are fairly dynamic in the long term. In contrast to Fig. 8, the synthesis is not as integrated, largely because the constituent sounds have very different spectral properties, resulting in stark transitions between them.

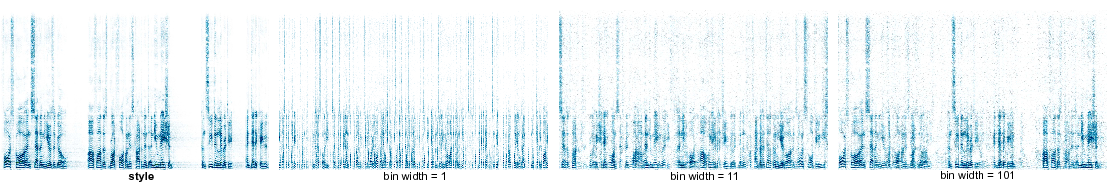

5.3.3. Controlling time and frequency ranges of textural elements

Figure 10

The corresponding spectrogram outputs for an increasing kernel width over time bins (1, 11, 101) and the style reference (speech) on the left. The effect of the varying kernel width is apparent from the spectrograms, as bigger slices of the original signal are captured in time with a larger receptive field. The same experiment was repeated for a piano sound, samples of which can be heard on the right.

- [Left] Style: Speech - Gettysburg address

- [2nd from left] Synthesized texture with time bin width = 1

- [2nd from right] Synthesized texture with time bin width = 11

- [Right] Synthesized texture with time bin width = 101
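The effect of the kernel width on the receptive field can be sketched with a plain 1D convolution along time. The example assumes the frequency bins act as input channels and each filter spans `width` consecutive time bins; the widths 1, 11 and 101 come from Figure 10, while the function name `conv1d_time`, the random filters, and the array sizes are hypothetical.

```python
import numpy as np

def conv1d_time(spectrogram, kernels):
    """'Valid' 1D convolution along time, followed by a ReLU.

    (As in most deep learning code, this is technically a cross-correlation.)
    spectrogram: (freq_bins, time_frames); frequency bins act as input channels.
    kernels:     (n_filters, freq_bins, width); each filter spans `width` frames.
    Returns:     (n_filters, time_frames - width + 1).
    """
    n_filters, _, width = kernels.shape
    n_out = spectrogram.shape[1] - width + 1
    out = np.empty((n_filters, n_out))
    for t in range(n_out):
        window = spectrogram[:, t:t + width]  # the slice of frames one output sees
        out[:, t] = np.tensordot(kernels, window, axes=([1, 2], [0, 1]))
    return np.maximum(out, 0.0)

rng = np.random.default_rng(0)
# Hypothetical spectrogram: 16 frequency bins x 200 time frames.
spectrogram = rng.random((16, 200))

for width in (1, 11, 101):
    kernels = rng.standard_normal((4, 16, width))  # 4 random filters
    features = conv1d_time(spectrogram, kernels)
    print(f"width = {width:3d}: each output frame sees {width} input frames, "
          f"output shape = {features.shape}")
```

A width of 1 sees only a single spectral frame at a time, whereas a width of 101 lets every feature depend on a long stretch of the reference, which is consistent with the larger slices of the original signal visible in the spectrograms.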

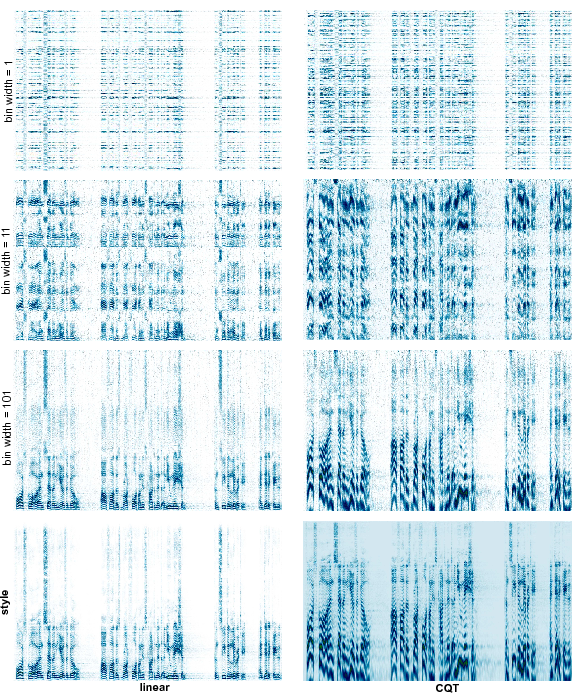

5.3.4. Frequency scaling

Figure 11

Synthesized textures generated from linearly-scaled STFT spectrograms (left column) and CQT spectrograms (right column). The style references (speech) are shown at the bottom. Gradually lengthening the kernel width (1, 11, 101 bins) in the re-oriented 1D-CNN results in capturing more of the frequency spectrum within the kernel for both the linear-STFT and CQT spectrograms, although there are subtle differences in the corresponding audio reconstructions.

The following are the STFT-based texture synthesis results corresponding to the left column in Fig. 11:

- [Bottom left] Style: Speech - Gettysburg address

- [Top left] Synthesized texture (STFT) with freq bin width = 1

- [2nd from top left] Synthesized texture (STFT) with freq bin width = 11

- [2nd from bottom left] Synthesized texture (STFT) with freq bin width = 101

The following are the CQT-based texture synthesis results corresponding to the right column in Fig. 11:

- [Bottom right] Style: Speech - Gettysburg address

- [Top right] Synthesized texture (CQT) with freq bin width = 1

- [2nd from top right] Synthesized texture (CQT) with freq bin width = 11

- [2nd from bottom right] Synthesized texture (CQT) with freq bin width = 101
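One way to see why the same kernel width behaves differently on the two representations is to compare how their frequency bins are spaced. The sketch below uses the standard definitions: STFT bins are evenly spaced in Hz, while CQT bins are geometrically spaced. The sample rate, FFT size, minimum frequency, and bin counts are hypothetical values chosen for illustration and are not taken from the paper.

```python
def stft_bin_frequencies(sample_rate, n_fft):
    """Centre frequencies (Hz) of the non-negative STFT bins: evenly spaced."""
    return [k * sample_rate / n_fft for k in range(n_fft // 2 + 1)]

def cqt_bin_frequencies(f_min, n_bins, bins_per_octave):
    """Centre frequencies (Hz) of the CQT bins: geometrically spaced."""
    return [f_min * 2.0 ** (k / bins_per_octave) for k in range(n_bins)]

# Hypothetical analysis settings.
stft_freqs = stft_bin_frequencies(sample_rate=22050, n_fft=512)
cqt_freqs = cqt_bin_frequencies(f_min=32.70, n_bins=84, bins_per_octave=12)

width = 11  # kernel width in frequency bins, as in the middle row of Figure 11
for start in (0, 40):
    stft_lo, stft_hi = stft_freqs[start], stft_freqs[start + width - 1]
    cqt_lo, cqt_hi = cqt_freqs[start], cqt_freqs[start + width - 1]
    print(f"kernel starting at bin {start}:")
    print(f"  STFT covers {stft_lo:8.1f} to {stft_hi:8.1f} Hz "
          f"(span {stft_hi - stft_lo:.1f} Hz)")
    print(f"  CQT  covers {cqt_lo:8.1f} to {cqt_hi:8.1f} Hz "
          f"(ratio {cqt_hi / cqt_lo:.3f})")
```

On the linear STFT axis, a kernel of fixed width always covers the same number of Hz, whereas on the CQT axis it covers the same musical interval wherever it sits. This may be one source of the subtle differences between the corresponding reconstructions.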