
Most of the sounds used in this paper come from the Syntex collection of datasets. Each dataset is generated by a different synthesis algorithm, and each file in a dataset is generated by sampling the parameters of that algorithm. The dataset names are mnemonic, suggesting the sound and the types of parameters the algorithms expose; they are not meant to make any claims about the “realism” of the sounds named. Here we describe how the various datasets in the collection are generated. The source code is also available at the Syntex website.

A basic frequency modulation algorithm:

Parameters:

Note: The texture-param datasets of this paper: FM-mf, FM-mi, and FM-cf, were prepared using this algorithm.
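For orientation, a basic frequency modulation tone can be sketched as follows. This is only an illustration of the textbook two-oscillator FM formula, not the Syntex source: the function name `fm_tone`, the argument names, and the reading of the dataset suffixes cf, mf, and mi as carrier frequency, modulation frequency, and modulation index are all assumptions.

```python
import math

def fm_tone(cf_hz, mf_hz, mod_index, dur_s=1.0, sr=16000):
    """Textbook FM: sin(2*pi*cf*t + mod_index * sin(2*pi*mf*t)).

    cf_hz, mf_hz and mod_index are assumed meanings of the cf/mf/mi
    dataset suffixes; they are not taken from the Syntex code.
    """
    n = int(dur_s * sr)
    out = []
    for i in range(n):
        t = i / sr
        # The modulator adds a time-varying phase offset to the carrier.
        phase = 2 * math.pi * cf_hz * t + mod_index * math.sin(2 * math.pi * mf_hz * t)
        out.append(math.sin(phase))
    return out

samples = fm_tone(cf_hz=440.0, mf_hz=110.0, mod_index=2.0, dur_s=0.5)
```

With `mod_index=0` the function reduces to a plain sinusoid at the carrier frequency, which is a convenient sanity check.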

Wind is constructed starting with a normally distributed white noise source followed by a 5th-order low-pass filter with a cutoff frequency of 400 Hz. This is followed by a bandpass filter with a time-varying center frequency (“cf”) and gain, and a constant bandwidth.

The variation in the cf and gain of the bandpass filter is determined by a 1-dimensional simplex noise signal that is band-limited and bounded (before scaling) in [-1, 1] (we use the OpenSimplex Python library).

The simplex noise generator takes a frequency argument linearly proportional to the “gustiness” parameter for the sound. A “howliness” parameter controls the bandwidth of the bandpass filter. A “strength” parameter controls the average frequency around which the cf of the wind gusts fluctuates.

Parameters:

Note: The texture-param datasets of this paper: wind-gust, wind-howl, and wind-strength, were prepared using this algorithm.

Five different “chimes” ring at average rates that are a function of wind strength (wind also plays in the background for this sound). Each chime is constructed from 5 exponentially decaying sinusoidal signals with frequencies, amplitudes, and decay rates based on the empirical data reported in [1]. A “chimeSize” parameter for this sound scales all the chime frequencies.

A simplex noise signal is computed for each chime based on the wind “strength” as described for DS_Wind_1.0 above. Zero-crossings in the simplex wave cause the corresponding chime to ring at an amplitude proportional to the derivative of the simplex signal at the zero crossing.
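The zero-crossing trigger rule can be sketched as below. This is a minimal illustration under stated assumptions: a phase-shifted sinusoid stands in for the simplex noise control signal, the derivative is estimated by a finite difference, and its magnitude is used as the ring amplitude. The function name `chime_triggers` is hypothetical.

```python
import math

def chime_triggers(control, sr):
    """Return (time_s, amplitude) for each zero crossing of `control`.

    Amplitude is the magnitude of the finite-difference slope at the
    crossing (using the magnitude is an assumption of this sketch).
    """
    events = []
    for i in range(1, len(control)):
        prev, cur = control[i - 1], control[i]
        rising = prev < 0.0 <= cur
        falling = prev > 0.0 >= cur
        if rising or falling:
            slope = (cur - prev) * sr  # units of control value per second
            events.append((i / sr, abs(slope)))
    return events

# Stand-in control signal: 1 Hz sinusoid sampled at 100 Hz for one second.
sr = 100
control = [math.sin(2 * math.pi * (i / sr) + 0.3) for i in range(sr)]
events = chime_triggers(control, sr)
```

A faster-moving control signal crosses zero more often and more steeply, so it rings the chime more frequently and more loudly.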

Parameters:

Note: The texture-param datasets of this paper: windchimes-size, windchimes-strength, were prepared using this algorithm.

This sound is based on the “Tapping 1-2” sound from the paper on texture perception by McDermott and Simoncelli [2]. The original sound consists of 10 regularly spaced pairs of taps over seven seconds, with the second tap coming one quarter of the way through the repeating cycle period. DS_Tapping1.2_1.0 resynthesises this sound with parameters for the cycle period and for the phase in the cycle of the second tap.
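As a small worked example, the tap onset times implied by a cycle period and a relative phase can be computed as follows. The function and argument names are hypothetical; the defaults reproduce the original sound described above (10 cycles over seven seconds, second tap one quarter of the way through each cycle).

```python
def tap_onsets(period_s=0.7, rel_phase=0.25, n_cycles=10):
    """Onset times (seconds) of the tap pairs in each cycle."""
    onsets = []
    for k in range(n_cycles):
        start = k * period_s
        onsets.append(start)                         # first tap of the pair
        onsets.append(start + rel_phase * period_s)  # second tap of the pair
    return onsets

onsets = tap_onsets()
```

With the defaults, the second tap of each pair falls 0.175 s after the first, and all 20 taps fit within the seven-second excerpt.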

Parameters:

Note: The texture-param datasets of this paper: tapping-rate, and tapping-relphase, were prepared using this algorithm.

Roughly imitative of a group of bees buzzing and moving around in a small space. Each bee buzz is created with an asymmetric triangle wave with an average center frequency in the vicinity of 200 Hz. The buzz source is followed by some formant-like filtering. Bees move toward and away from the listener following a 1-dimensional simplex noise signal, controlled by a frequency parameter for the simplex noise generator and by a minimum and maximum distance. This motion creates some variation in the buzzing frequency and amplitude due to the Doppler effect and amplitude roll-off with squared distance. These parameters are all fixed (the simplex frequency parameter is 2 Hz, and the minimum and maximum distances are 2 and 10 meters).

Two parameters are systematically varied for the experiments in this paper. One is the mean of the Gaussian distribution from which each bee’s average center frequency is drawn.

The other is the “busybodyFreqFactor”. Buzzes have a “micro” variation in frequency that follows a 1-D simplex noise signal parameterized with a frequency argument of 14 Hz. The “busybodyFreqFactor” controls the excursion of these micro variations by multiplying the [-1,1] simplex noise signal to give the frequency variation in octaves.
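The octave-scaled micro variation can be written out as a one-line mapping. This is a sketch, assuming the scaled noise value is applied as an exponent of 2 to the bee's center frequency; the function and argument names are hypothetical.

```python
def buzz_frequency(center_hz, busybody_freq_factor, noise_value):
    """Instantaneous buzz frequency for one noise sample.

    noise_value is a simplex-style sample in [-1, 1]; the product
    busybody_freq_factor * noise_value is a deviation in octaves.
    """
    if not -1.0 <= noise_value <= 1.0:
        raise ValueError("noise_value must lie in [-1, 1]")
    octaves = busybody_freq_factor * noise_value
    return center_hz * 2.0 ** octaves

peak = buzz_frequency(200.0, 0.5, 1.0)  # half an octave above 200 Hz
```

For example, a factor of 0.5 lets a 200 Hz buzz wander between roughly 141 Hz and 283 Hz.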

Parameters:

Note: The texture-param datasets of this paper: bees-cf, and bees-busy, were prepared using this algorithm.

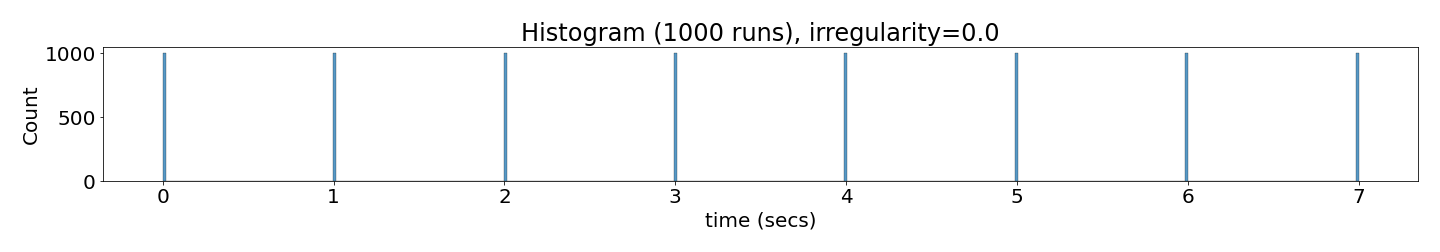

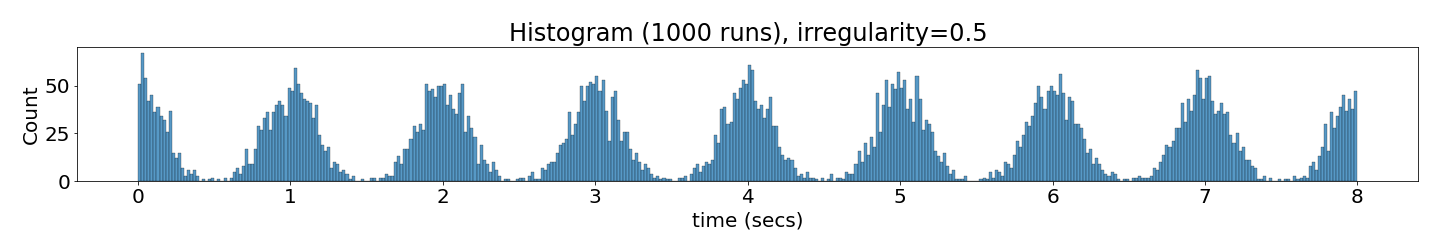

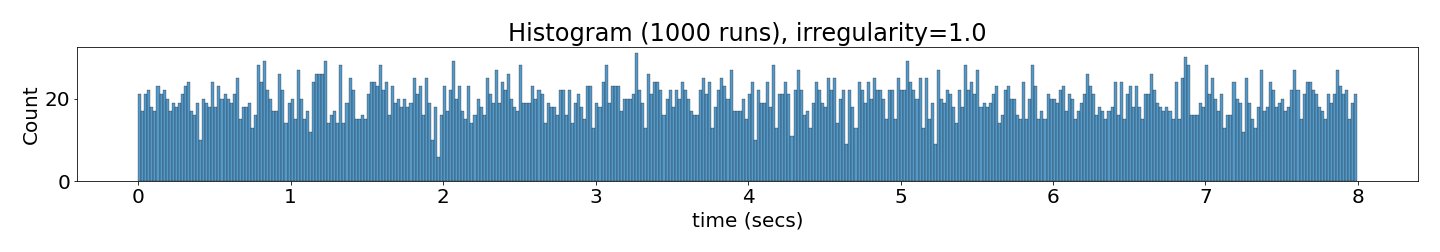

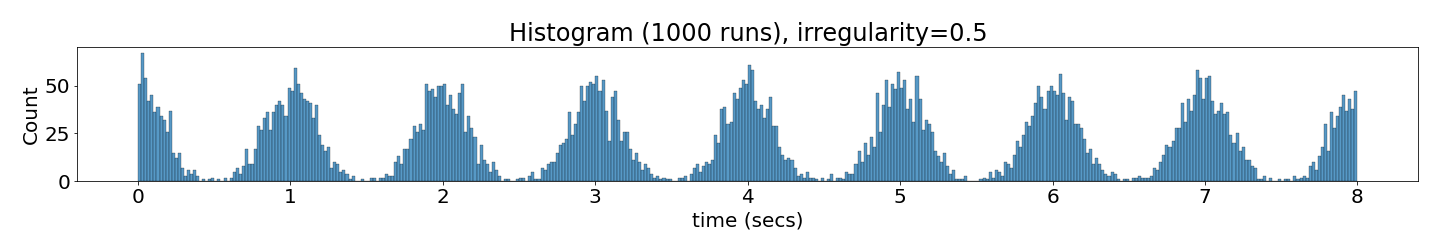

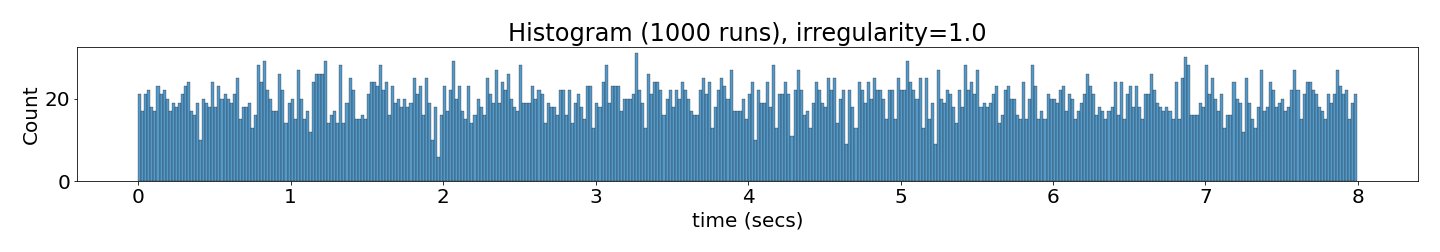

Chirps are frequency sweeps of a pitched tone with 3 harmonics (frequencies at [0, 1, and 2] times the fundamental). Each chirp has a center frequency (expressed in octaves relative to 400 Hz, see below) drawn from a Gaussian distribution and a duration, and sweeps linearly in octaves. Chirps occur at an average rate in events per second (eps) and can be spaced regularly (identically) in time, or irregularly according to a parameter (“irreg_exp”; see Figure 1).
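A sweep that is linear in octaves is exponential in Hz. The sketch below shows the fundamental's trajectory for one chirp, assuming the sweep is centered on the chirp's center frequency; the `sweep_octaves` argument and the function name are hypothetical and not taken from the Syntex code.

```python
def chirp_frequency(t, dur_s, cf_octaves, sweep_octaves, ref_hz=400.0):
    """Fundamental frequency (Hz) at time t within a chirp of length dur_s.

    cf_octaves is the center frequency in octaves relative to ref_hz;
    sweep_octaves is the total (signed) extent of the sweep in octaves.
    """
    position = t / dur_s - 0.5  # -0.5 at chirp start, +0.5 at chirp end
    return ref_hz * 2.0 ** (cf_octaves + sweep_octaves * position)

start_hz = chirp_frequency(0.0, 0.1, cf_octaves=0.0, sweep_octaves=2.0)
end_hz = chirp_frequency(0.1, 0.1, cf_octaves=0.0, sweep_octaves=2.0)
```

A two-octave sweep centered on 400 Hz therefore runs from 200 Hz to 800 Hz, passing through 400 Hz at its midpoint.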

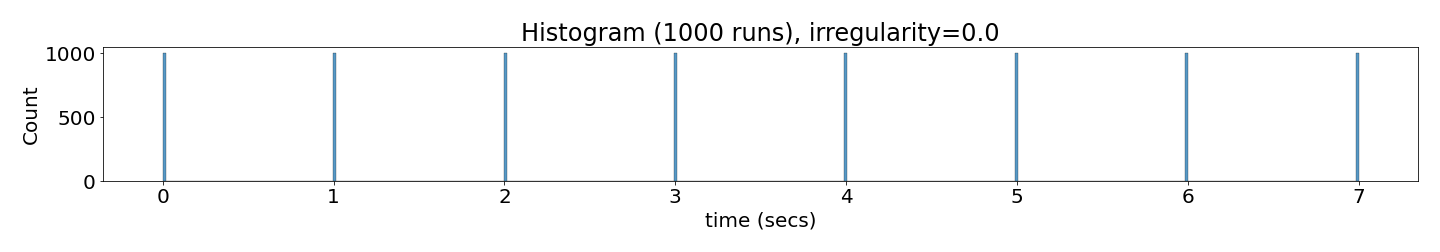


Parameters:

Note: The texture-param datasets of this paper: chirps-rate, chirps-cf, and chirps-irreg, were prepared using this algorithm.

Uniformly distributed (“white”) noise signal comb filtered using a feedback delay:

Parameters:

Note: The texture-param dataset of this paper: fbnoise-pitchedness, was prepared using this algorithm.
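A feedback comb filter on uniform noise can be sketched as below. This uses the standard recurrence y[n] = x[n] + g·y[n−D] as an illustration; the exact filter form, the argument names, and the idea that the feedback gain is what governs “pitchedness” are assumptions.

```python
import random

def feedback_comb(x, delay_samples, feedback):
    """Feedback comb filter: y[n] = x[n] + feedback * y[n - delay_samples]."""
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    if abs(feedback) >= 1.0:
        raise ValueError("abs(feedback) must be below 1 for stability")
    y = []
    for n, xn in enumerate(x):
        delayed = y[n - delay_samples] if n >= delay_samples else 0.0
        y.append(xn + feedback * delayed)
    return y

rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(16000)]
# At a 16 kHz sample rate, a 40-sample delay puts resonances at multiples of 400 Hz.
pitched = feedback_comb(noise, delay_samples=40, feedback=0.9)
```

Raising the feedback gain toward 1 sharpens the resonant peaks, so the noise sounds more clearly pitched; a gain of 0 leaves the noise unchanged.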

Pops are generated by a brief noise burst (3 uniformly distributed random noise samples in [-1,1]) followed by a narrow bandpass filter with a center frequency drawn from a narrow Gaussian distribution.
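A single pop can be sketched as a 3-sample noise burst passed through a two-pole bandpass filter. The filter here is a standard biquad bandpass (Audio EQ Cookbook form) chosen for illustration; the Q value, the Gaussian mean and spread, and all names are hypothetical rather than taken from the Syntex code.

```python
import math
import random

def bandpass_coeffs(cf_hz, q, sr):
    """Biquad bandpass coefficients (0 dB peak gain), normalized so a[0] == 1."""
    w0 = 2 * math.pi * cf_hz / sr
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (1.0, -2 * math.cos(w0) / a0, (1 - alpha) / a0)
    return b, a

def make_pop(cf_hz, q=20.0, sr=16000, n_out=800, rng=None):
    """Filter a 3-sample uniform noise burst with a narrow bandpass."""
    rng = rng or random.Random()
    x = [rng.uniform(-1.0, 1.0) for _ in range(3)] + [0.0] * (n_out - 3)
    b, a = bandpass_coeffs(cf_hz, q, sr)
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for xn in x:
        # Direct form I difference equation.
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        y.append(yn)
        x2, x1 = x1, xn
        y2, y1 = y1, yn
    return y

rng = random.Random(1)
cf = rng.gauss(1000.0, 20.0)  # center frequency from a narrow Gaussian (values assumed)
pop = make_pop(cf, rng=rng)
```

The result is a short, exponentially decaying ring at the filter's center frequency; a higher Q gives a longer, more tonal pop.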

Parameters:

Note: The texture-param datasets of this paper: pop-rate, pop-cf, and pop-irreg, were prepared using this algorithm.

Individual claps are generated using the Pops model (above), but with noise bursts of 45 samples followed by bandpass filters whose center frequencies are uniformly distributed in [800, 1400] Hz. Clapper sequences are generated with inter-clap intervals normally and narrowly distributed around 0.5 s, with slight periodic irregularity (Figure 1) to avoid unrealistic alignment between different clappers. Reverb is used to create a basic room characteristic.

Parameters:

We used three recorded datasets that have reasonably accurate labels for systematic parameter variation.

The NSynth data set [3] contains over 300K musical notes from over 1K instruments systematically sampled and labeled over their respective ranges of chromatic pitch values (as well as other qualities). We used a subset consisting of one octave of 13 chromatic pitches for a brass instrument, with amplitude scaling at 10 different values.

Parameters:

Note: The texture-param dataset of this paper: nsynth-pitch, refers to this dataset.

This data set was recorded by filling a bucket with water poured from another bucket. The bucket was metal and had a capacity of 2.5 gallons. It was (repeatedly) filled at an approximately constant rate over a duration of 30 seconds. The transient sounds at the beginning and end of each recording were trimmed, and the recording was then divided at 11 equally spaced time points, each used as the starting point of a 2-second excerpt labeled with one of 11 different “fill levels” in [0,1]. Variations for each fill level come from the multiple fillings.

Parameters:

Note: The texture-param dataset of this paper: water-fill, refers to this dataset.
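The excerpting scheme for the water-fill recordings can be illustrated with a short calculation. This sketch assumes that the 11 start points are spaced so the last excerpt ends exactly at the end of the trimmed recording, and it uses 30 s as a stand-in length (the length after trimming is not stated); the function name is hypothetical.

```python
def fill_excerpts(total_s, n_levels=11, excerpt_s=2.0):
    """Return (fill_level, start_s, end_s) for each equally spaced excerpt."""
    last_start = total_s - excerpt_s
    if last_start < 0 or n_levels < 2:
        raise ValueError("recording too short or too few levels")
    step = last_start / (n_levels - 1)
    return [
        (i / (n_levels - 1), i * step, i * step + excerpt_s)
        for i in range(n_levels)
    ]

excerpts = fill_excerpts(30.0)
```

Under these assumptions the start points are 2.8 s apart, so neighbouring excerpts do not overlap and the labels step through 0.0, 0.1, ..., 1.0.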

The Amen Break is a loop of the first 2 bars of the famous and often-sampled drum break played by Gregory Coleman of the Winstons on the track “Amen Brother.” Variations in speed (without pitch change) were made using Audacity signal processing tools at intervals of one semitone.
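One way to read “intervals of one semitone” for a speed change is that adjacent variations differ in tempo by a factor of 2^(1/12), the same ratio that separates adjacent semitones in pitch. The sketch below computes tempo factors under that assumption; the range of steps shown is hypothetical.

```python
def tempo_factors(semitone_steps):
    """Tempo multiplier for each step, assuming 2**(1/12) per semitone."""
    return [2.0 ** (k / 12.0) for k in semitone_steps]

factors = tempo_factors(range(-6, 7))  # hypothetical range of 13 variations
```

Under this reading, a step of +12 doubles the tempo and a step of 0 leaves the loop at its original speed.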

Parameters:

Note: The texture-param datasets of this paper: drum-tempo and drum-rev, refer to this dataset.

[1] Teemu Lukkari and Vesa Välimäki. Modal synthesis of wind chime sounds with stochastic event triggering. In Proceedings of the 6th Nordic Signal Processing Symposium (NORSIG 2004), pages 212–215. IEEE, 2004.

[2] Josh H McDermott and Eero P Simoncelli. Sound texture perception via statistics of the auditory periphery: evidence from sound synthesis. Neuron, 71(5):926–940, 2011.

[3] Jesse Engel, Cinjon Resnick, Adam Roberts, Sander Dieleman, Mohammad Norouzi, Douglas Eck, and Karen Simonyan. Neural audio synthesis of musical notes with wavenet autoencoders. In International Conference on Machine Learning, pages 1068–1077. PMLR, 2017.